Stéphane d'Ascoli

Research Scientist

Meta AI, Paris

Biography

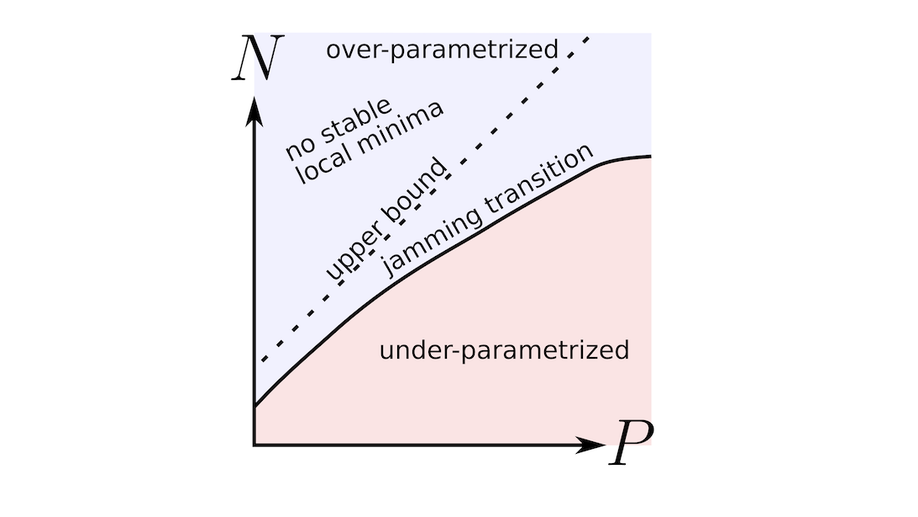

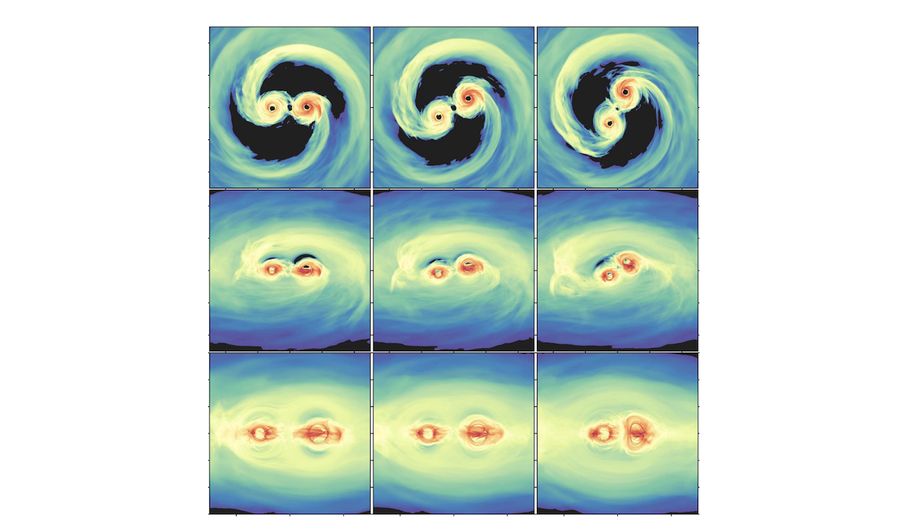

Hi! I’m a Research Scientist at Meta AI, working in the Brain & AI team. Previously, I was an AI4Science research fellow at EPFL and completed a Ph.D. in deep learning, during which I split my time between the Center for Data Science at ENS Paris and Facebook AI Research (you can find my thesis here). Prior to that, I studied Theoretical Physics at ENS Paris and worked with NASA on black hole mergers. You can download my CV here.

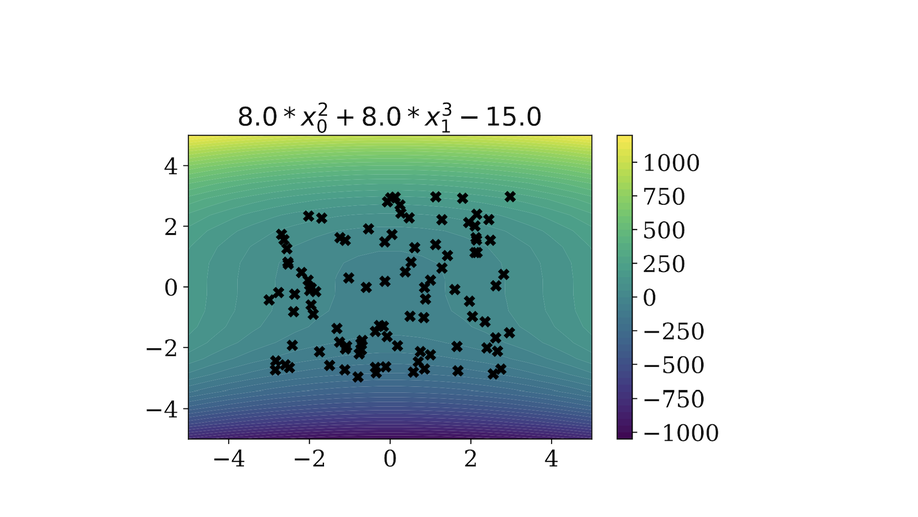

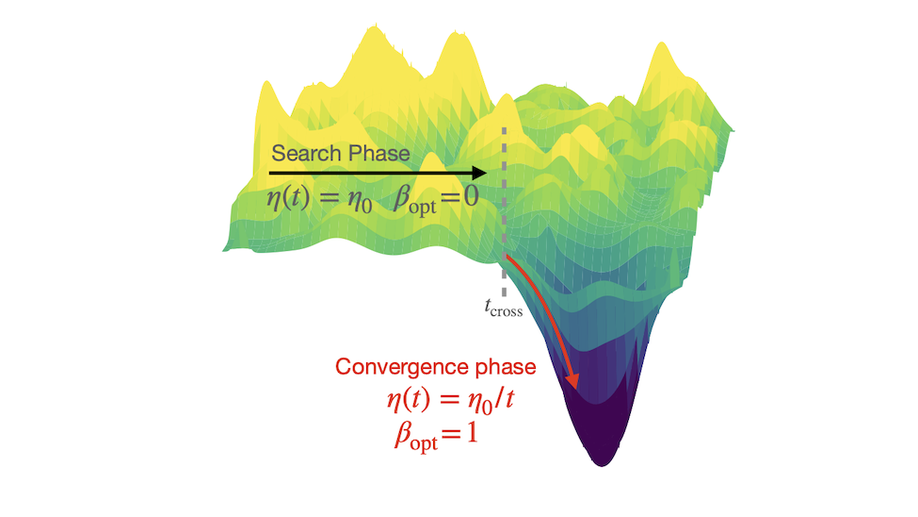

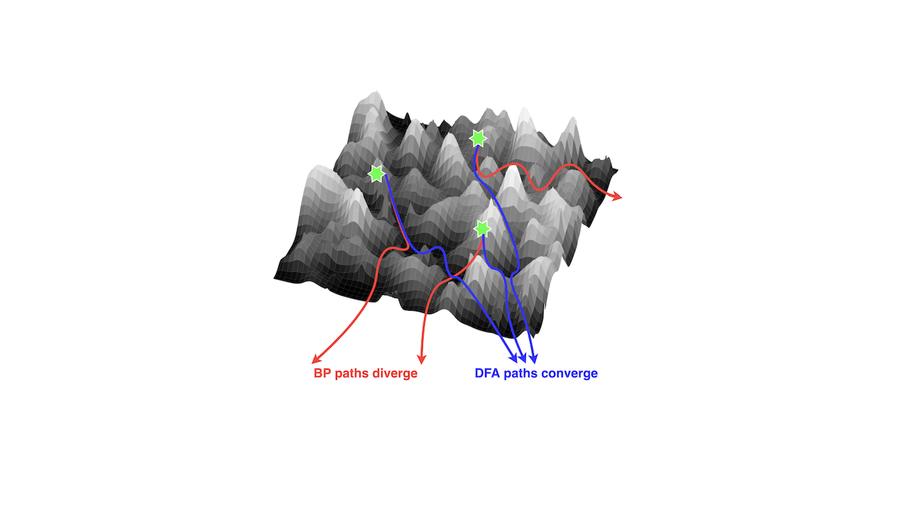

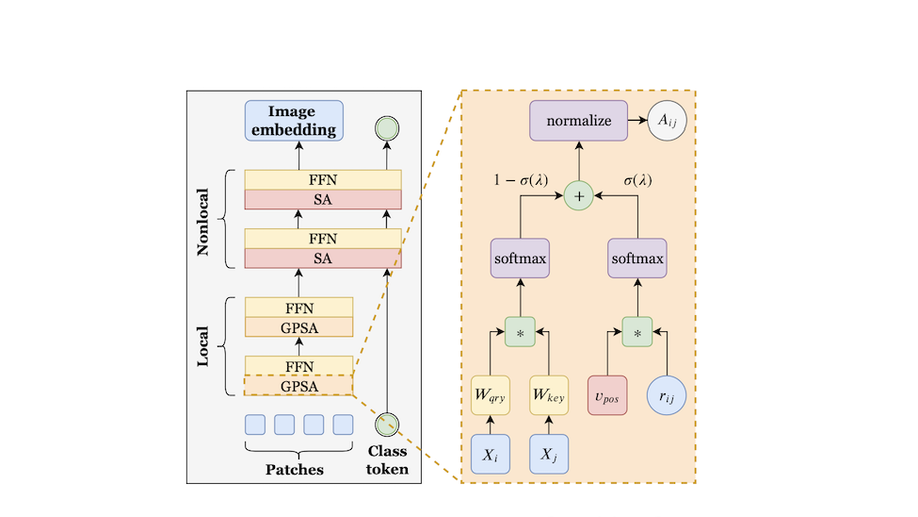

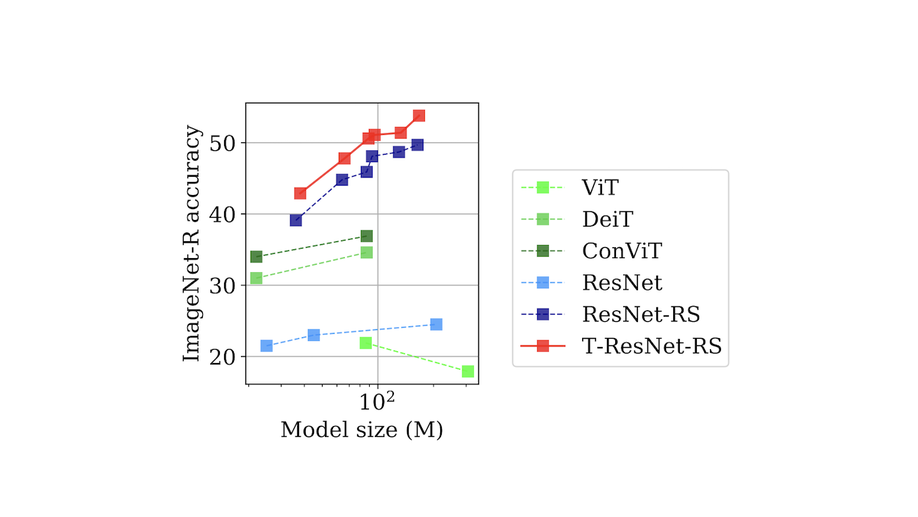

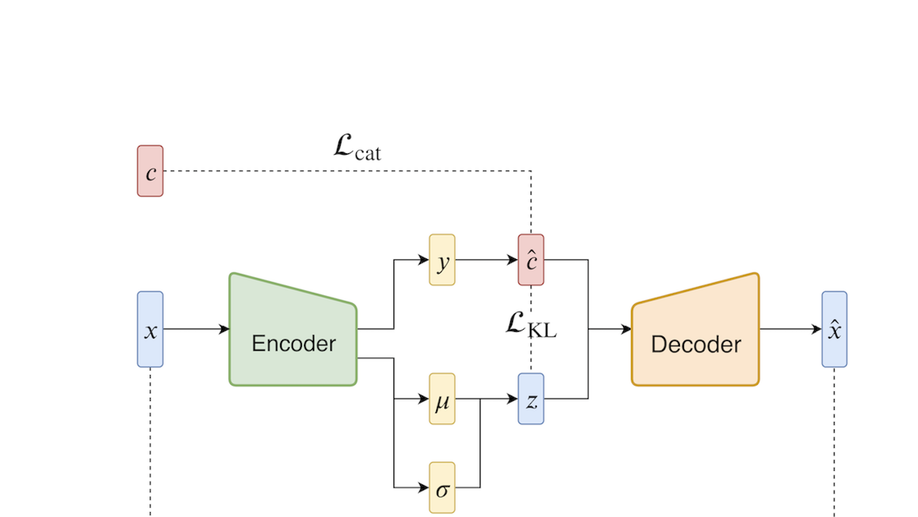

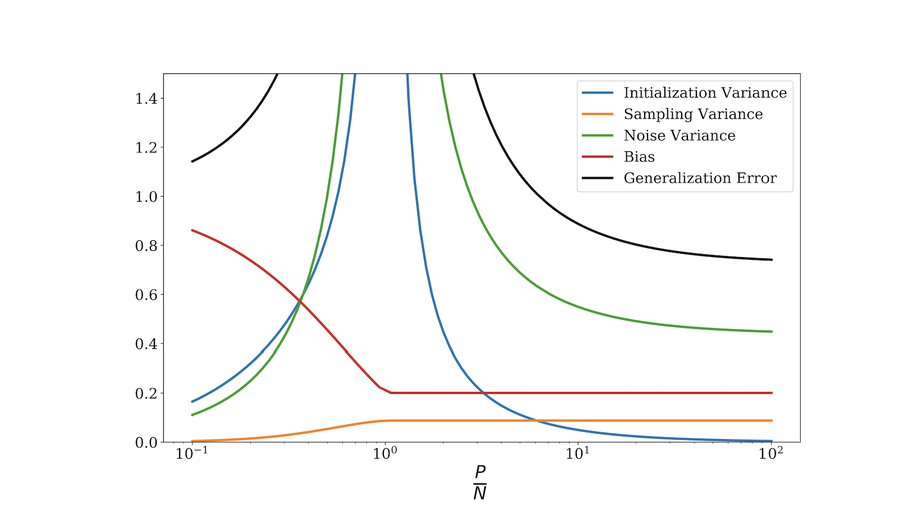

My current research focuses on decoding neural activity, with the aim of better understanding how the brain works and perhaps one day helping those who have difficulty speaking or typing. I am also interested in understanding large neural networks and applying them to computer vision, symbolic regression and the natural sciences in general. Outside work, I love communicating about science (I wrote a few books for the general public), playing the clarinet and travelling very far on my bicycle!

Education

- PhD in Artificial Intelligence, 2022

  Ecole Normale Supérieure, Paris

- Master's in Theoretical Physics, 2018

  Ecole Normale Supérieure, Paris

- Bachelor's in Physics, 2016

  Ecole Normale Supérieure, Paris